It seems a simple task: Just run a query that groups data the way you want it and insert it into another “longterm” database. But you don’t want or need 10-second granularity when you look weeks or months back. Most people would want to keep data for a longer period (I do as well). The right size surely depends on the hardware your InfluxDB instance runs on, so I can’t give any advice except that they should be a portion of the size of your available RAM. Check your retention policies and adjust them to throw away data when you no longer need it.

Probably the most common issue and also the most time consuming to fix, but with it you can regain up to 90% disk space. Keeping Raw Data Forever (Disk Space And Speed) Now that you’ve inspected your database, you likely have found some reasons why it may be slow. More on SHARDS and RETENTION POLICIES here:ĭashboard with internal InfluxDB metrics. Here you can find the actual files that InfluxDB stores on disk: ls -la /var/lib/influxdb/data/telegraf// # show how data is stored and evicted SHOW RETENTION POLICIES # show storage shards and groups SHOW SHARDS SHOW SHARD GROUPS Simplified, this means your data is stored in shards of 7 days and never gets evicted. The default retention policy has a duration of 0 (forever) and a shard duration of 168h, that is one week. The retention policy also defines the shard duration - basically the time duration one data shard should contain. You can have multiple retention policies on a single database. The RETENTION POLICY defines how long data should be kept in a database.

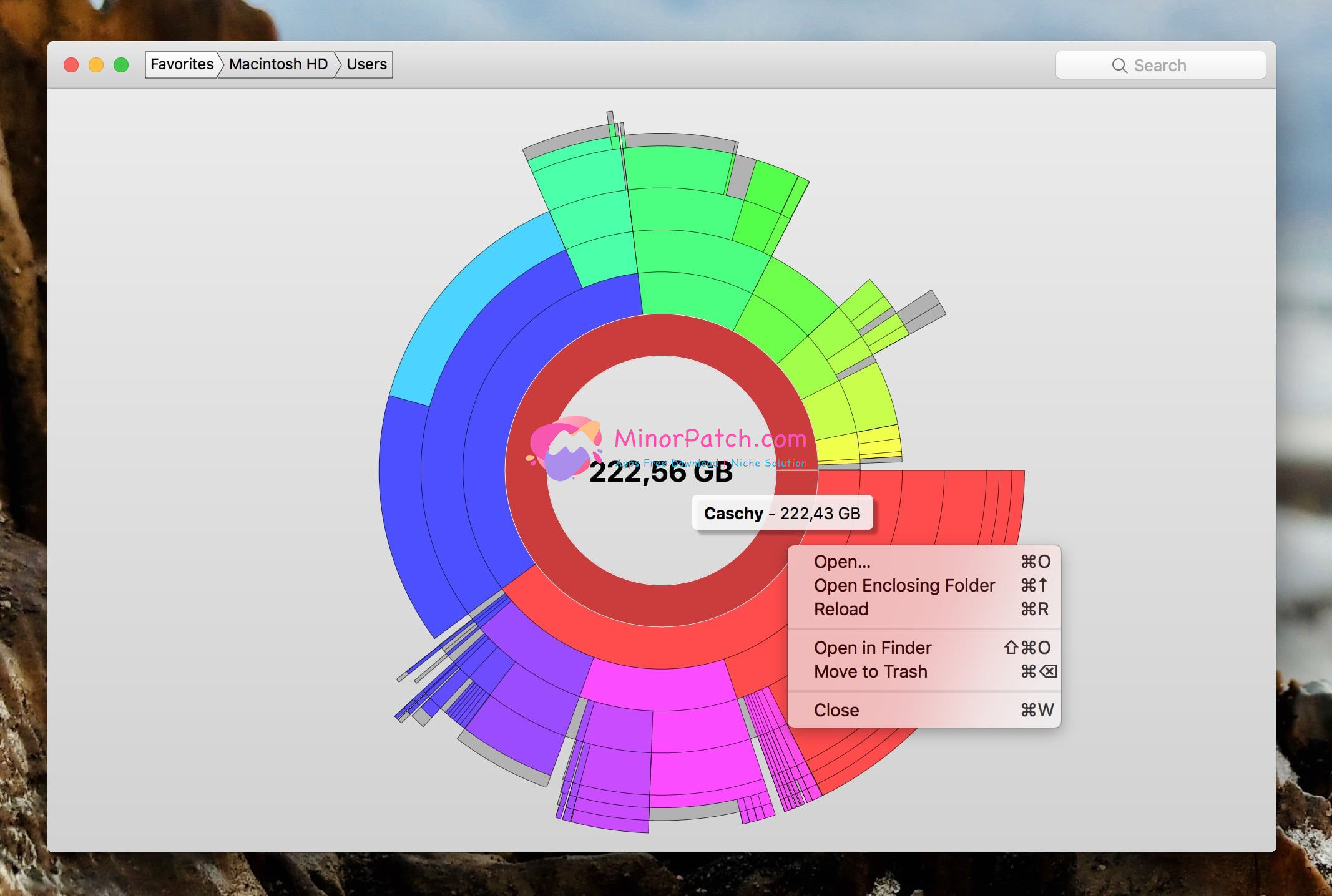

#Disk graph calc series#

# show raw data from your measurements (at any point in time) SELECT * FROM "cpu" WHERE time < ' 00:00:00' ORDER BY time DESC LIMIT 10 Some Queries To Inspect Your Data # show your measurements or how many you have SHOW MEASUREMENTS SHOW MEASUREMENT CARDINALITY # show how many tags keys you have, optionally per measurement SHOW TAG KEY CARDINALITY SHOW TAG KEY CARDINALITY FROM "cpu" # show or count different values of the tag key "host" SHOW TAG VALUES CARDINALITY WITH KEY = "host" SHOW TAG VALUES FROM "cpu" WITH KEY = "host" # show how many fields you have in measurement "cpu" SHOW FIELD KEY CARDINALITY FROM "cpu" # show how many series you have in a database SHOW SERIES CARDINALITY ON telegraf # show or count different series in measurement "cpu" SHOW SERIES CARDINALITY ON telegraf FROM "cpu" SHOW SERIES ON telegraf FROM "cpu" Explore Your Data Eviction Policies

Tipp: Use Grafanas “Explore” page to run single queries, to explore your database.Ĭheck raw data from your measurements. There are two ways to check storage and cardinality of your InfluxDB. Now let’s check what I’ve just said with your own database, assuming you’re reading this because you use InfluxDB. Besides, the times you’ll look back half a year and are interested in metrics on a 10-seconds granularity are rare, very rare. Nice! Yet after some time, the storage grows and grows as you add more metrics. So this is great, right? You start using InfluxDB and input data and nothing gets lost. If you don’t aggregate, your metrics will be stored “raw” forever. The default RETENTION POLICY of InfluxDB is “forever”, so you’ll gather millions of records. Now multiply that by the metrics you are collecting… It’s huge. Data will be stored with the precision you “enter” them.Īn example: if you collect and report a single metric every 10 seconds, it will be stored “as-is”, so you will have 6 metrics per minute. It can handle nano seconds precision, meaning you can input metrics per nanosecond. InfluxDB is a time-series database, which means it records data over time. And there is another major factor for making queries slow: cardinality. So we must understand roughly how data is stored. That’s obvious right? The more data the query has to skim through, the longer it will take. What Makes Queries Slowīefore we can optimize queries, we must understand what makes them slow, which operations or queries are expensive and what is it you actually want.
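One way to automate that "simple task" in InfluxDB 1.x is a continuous query that writes grouped data into another database. Below is a minimal sketch. The names `longterm` and `cq_telegraf_5m`, the 5-minute interval and the 30-day raw retention are hypothetical choices. The sketch also assumes the default retention policy of `telegraf` is `autogen`, so check with `SHOW RETENTION POLICIES` first.

```sql
-- Hypothetical target database for downsampled data (its "autogen" policy keeps data forever)
CREATE DATABASE "longterm"

-- Hypothetical CQ: every 5 minutes, average all numeric fields of every
-- measurement in "telegraf" and write them to the same measurement name in "longterm"
CREATE CONTINUOUS QUERY "cq_telegraf_5m" ON "telegraf"
BEGIN
  SELECT mean(*) INTO "longterm"."autogen".:MEASUREMENT
  FROM /.*/
  GROUP BY time(5m), *
END

-- Assumption: raw data is only needed for 30 days; older shards are then dropped
ALTER RETENTION POLICY "autogen" ON "telegraf" DURATION 30d
```

Keep a few details in mind:

- `mean(*)` prefixes the resulting field names with `mean_`.
- A continuous query only processes new intervals after it is created. To backfill older data, run the same `SELECT ... INTO` manually with a `WHERE time` range before shortening the raw retention policy.
- The `ALTER RETENTION POLICY` statement deletes raw data older than the new duration.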

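To put rough numbers on the savings, compare one series sampled every 10 seconds with a rollup to 5-minute averages. The 5-minute interval is only an example. Actual disk savings will differ from the point ratio, because InfluxDB compresses stored data.

$$
\begin{aligned}
\text{raw (10 s)}: &\quad 6\ \tfrac{\text{points}}{\text{min}} \times 60 \times 24 \times 365 = 3\,153\,600\ \text{points/year} \\
\text{rollup (5 min)}: &\quad 12\ \tfrac{\text{points}}{\text{h}} \times 24 \times 365 = 105\,120\ \text{points/year} \\
\text{ratio}: &\quad \frac{3\,153\,600}{105\,120} = 30 \;\Rightarrow\; 1 - \tfrac{1}{30} \approx 96.7\,\%\ \text{fewer points}
\end{aligned}
$$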